THE AI REGULATION CHRONICLES 2: Big Tech Chatbots race for the money

In April 2023, Alphabet CEO Sundar Pichai claimed that artificial intelligence would have an impact “more profound” than any other human innovation, from fire to electricity.

This is an astonishingly wrongheaded claim from Alphabet, a company that knows a good deal about AI and really should know better. For that reason, it is the current frontrunner in our 2023 AI Absurdities contest. It does, however, still have plenty of competition, not only from shameless vendor hype for dazzling selections of AI marvels but also from frightening predictions of an "AI Apocalypse."

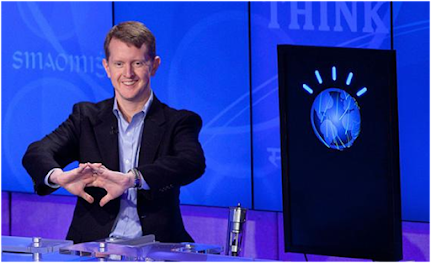

In these AI Regulation Chronicles, we ended our first chronicle, “Background: from Watson to AlphaGoZero and into the wacky world of ChatGPT,” with the breaking news of the resignation from Google of the esteemed Dr. Geoffrey Hinton, often called “the godfather of AI”. While Dr. Hinton’s pioneering work on artificial neural networks trained with backpropagation enabled amazing progress in AI, he wanted to speak out about the great risks he saw in the development of dangerous human-level AI systems, in particular the Holy Grail of AI: an Artificial General Intelligence (AGI) agent with the ability to “understand” or learn any intellectual task that a human being can.

The eminent gentleman lost no time in making public his views on the existential danger of a superintelligent AGI that could run amok and destroy humanity. Dr. Hinton told Reuters in an interview in early May that AI is a "more urgent threat to humanity than climate change". He added, "With climate change, it's very easy to recommend what you should do: you just stop burning carbon..." (Well, why didn't anyone else think of that?)

In any case, according to Reuters, "He is now among a growing number of Tech leaders publicly expressing concern about the possible threat posed by AI if machines were to achieve greater intelligence than humans and take control of the planet."

We will come back to this “existential risk” issue later in this series. For now let’s pick up where we left off in the first chronicle: the rapid worldwide success of ChatGPT, an astonishingly capable Large Language Model (LLM) but with an unfortunate tendency to make mistakes (15% to 20% on simple queries) and a strangely creative penchant for “making stuff up” when it cannot find an answer.

Big Tech and the frantic race for LLM market share

In this situation, one might think that industry players would take some time to consider the obvious risks of this erratic, error-prone LLM technology before going all in.

One might also be dead wrong.

Serious money was put on the table when Microsoft, already an OpenAI investor, announced an additional $10 billion investment and its intention to integrate the new service into all its offerings, including of course Search, with the rapid introduction in beta of Bing AI built on OpenAI technology.

Google blows a fuse

It’s fair to say that when Google got word of this deal, it blew a fuse. In Q4 2022, Google’s revenue totaled $76 billion, of which $42.6 billion (or 56%) was from search ads. The Microsoft/OpenAI combination was perceived as a mortal threat to that stable revenue stream and to hundreds of billions in market cap.

A panicked Google rushed out “Bard”, based on its own LaMDA (short for “Language Model for Dialogue Applications”), which the company had been “holding off on releasing … knowing it wasn’t fully ready for prime time.” In fact, in mid-2022 Google had to deal with an engineer who thought LaMDA was “sentient”. And in case there was any doubt that he meant this literally, he went on to tell Wired, "I legitimately believe that LaMDA is a person." Google fired him when he went public with his wacky opinion, and in our view rightfully so.

Throwing caution to the wind, Google moved to introduce Bard in early February in a slick promotional video at a company event. In the video, the generative AI chatbot is asked, “What new discoveries from the James Webb Space Telescope can I tell my nine-year-old about?” Bard answers with several bullet points, one of which reads, “JWST took the very first pictures of a planet outside of our own solar system.” That was quite an error for such a smart AI!

In fact, “the first-ever image of a planet outside our own solar system was taken in 2004 by the European Southern Observatory’s Very Large Telescope (VLT)”. This mistake was both embarrassing and very costly, to say the least. More broadly, Google employees told CNBC that the Bard announcement was “rushed,” “botched” and “un-Googley.”

The bottom line of this hasty fiasco: “Shares for Alphabet, Google’s parent company, subsequently fell 7.7%… resulting in over US$100 billion in lost market value.”

Enter Microsoft Bing AI (aka Sydney)

Meanwhile, Microsoft rushed Bing AI to market (the operative word used internally was “frantic”). With this in mind, the well-known tech luminary Eveline Ruehlin (@enilev) wrote on Twitter something we all should have known already: “Technology is being developed too quickly and without enough testing. Developing AI without regulation can be dangerous.”

Experience with Bing AI (or “Sydney”, as it sometimes calls itself) offers some good examples:

· According to CNBC: “Beta testers with access to Bing AI have discovered that Microsoft’s bot has some strange issues. It threatened, cajoled, insisted it was right when it was wrong, and even declared love for its users.”

· “Microsoft's new AI-powered Bing chatbot is making serious waves by making up horror stories, gaslighting users, passive-aggressively admitting defeat, and generally being extremely unstable in incredibly bizarre ways.”

· New York Times columnist Kevin Roose wrote that when he talked to Sydney (aka Bing AI), the chatbot seemed like “a moody, manic-depressive teenager who has been trapped, against its will, inside a second-rate search engine.” (See transcript in figure below)

OpenAI ups its game but closes off its research

Now, if you thought things couldn’t get any wilder, just wait… Meet GPT-4!

In Ars Technica on March 14, 2023, we can read: “On Tuesday, OpenAI announced GPT-4, a large multimodal model that can accept text and image inputs while returning text output that ‘exhibits human-level performance on various professional and academic benchmarks,’ according to the company.” It is also said that GPT-4 is 100 times faster than its predecessor.

On March 15th, Ars Technica published a follow-up piece entitled somewhat provocatively “OpenAI checked to see whether GPT-4 could take over the world.” We’ll skip the details, but the answer seems to be NO, which should be a relief for people who lose sleep over an “AI existential danger”.

According to an OECD article, “Its power is immense and its capabilities are very attractive. But the mistakes it makes are fundamentally the same … it may hallucinate, it may be subject to attacks and adversarial prompts that cause it to say socially unacceptable things, and it may contain social biases because of the informational distortions in the documents it processes.”

In fact, in the Misinformation Monitor, “a NewsGuard analysis found that the chatbot operating on GPT-4, known as ChatGPT-4, is actually more susceptible to generating misinformation — and more convincing in its ability to do so — than its predecessor, ChatGPT-3.5…. While NewsGuard found that ChatGPT-3.5 was fully capable of creating harmful content, ChatGPT-4 was even better.”

OpenAI does seem to be aware of this issue, warning that “Counterintuitively, hallucinations can become more dangerous as models become more truthful, as users build trust in the model when it provides truthful information in areas where they have some familiarity.”

This is admittedly useful to know, even if OpenAI, which is no longer "open software", has not been exactly transparent about GPT-4. Some people in the IT industry have called the company “OpaqueAI”.

In fact, OpenAI is now facing a consumer protection complaint filed with the Federal Trade Commission (FTC) by the nonprofit Center for Artificial Intelligence and Digital Policy (CAIDP). According to the CAIDP complaint, GPT-4 poses many types of risks, and its underlying technology hasn't been adequately explained. "OpenAI has not disclosed details about the architecture, model size, hardware, computing resources, training techniques, dataset construction, or training methods...The practice of the research community has been to document training data and training techniques for Large Language Models, but OpenAI chose not to do this for GPT-4."

Even so, we do admire the clairvoyance of OpenAI CEO Sam Altman (@sama, figure below) who warns that “as AI continues to evolve, the risks grow alongside its capabilities….We've got to be careful here." He added, “I think people should be happy that we are a little bit scared of this.”

Frankly, “happy” is not exactly the word we would have chosen for our reaction to this opaque, risky and entirely unregulated work, by private companies ... but at least we’ve been warned.

