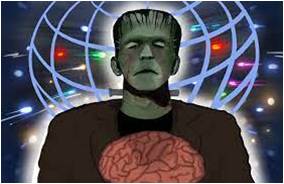

AI Regulation Chronicles --- EXTINCTION RISK: the AGI Frankenstein scenario

“Hundreds of leading figures in artificial intelligence”, according to the Financial Times, issued a statement in May 2023 describing the existential threat that the technology they helped to create poses to humanity. “Mitigating the risk of extinction from AI should be a global priority,” it said, “alongside other societal-scale risks such as pandemics and nuclear war.” A shame they forgot climate change, which, as we expect you have noticed, is already arriving!

So, what’s your P(AI doom)?

After the shock of the extinction risk statement, the West Coast AI community immediately became embroiled in heated conversations about doomsday scenarios involving a runaway superintelligence. Symptomatic of this Silicon Valley excitement is a fashionable “parlor game” called P(AI doom), in which a participant provides a personal estimate of the “probability” of the destruction of humanity by a genocidal AGI. On a Hard Fork podcast, in late M...