AI REGULATION CHRONICLES (1) Background: from Watson to AlphaGoZero and into the weird world of ChatGPT

This is the first blog in our 2023 series “AI Regulation Chronicles”. We do not see ourselves as technical experts on Artificial Intelligence, but rather …

Many say that 2023 will be the year of Large Language Models like ChatGPT, with great potential for good but also numerous risks, today and tomorrow. We agree, but we also think that 2023 will be the year of serious regulation, on both sides of the Atlantic, of high-potential, high-risk Artificial Intelligence.

Our main focus will be on Europe and the EU AI Act, the world's first attempt at comprehensive AI regulation. The new EU law was originally planned for April 2023, but the arrival and rapid, widespread adoption of ChatGPT was a game changer, and the Act is now expected towards the end of 2023. We will also follow the AI regulation efforts just getting started in the US under the Biden-Harris administration.

Background: the rise of statistical Machine Learning

Artificial Intelligence may well be the most potentially transformative technology since the Internet, but it has clearly become the reigning champion of tech hype and media buzz, not to mention social network shootouts.

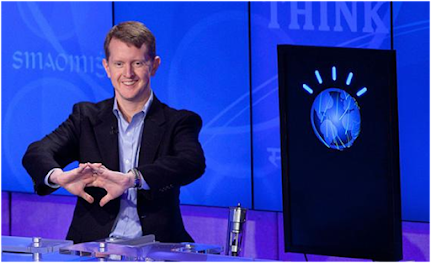

Back in 2011, IBM’s Watson, a “cognitive” computer capable of answering natural language questions, competed on Jeopardy!, a popular quiz show, against all-time champions Brad Rutter and Ken Jennings before a TV audience of millions… and beat them.

In fact, the Watson that won Jeopardy! was the outcome of decades of research in “Symbolic AI”. It used the knowledge representation and reasoning capabilities of Prolog, an AI language invented over 45 years ago. IBM researchers painstakingly combined numerous proven language analysis algorithms with new approaches from statistical machine learning, backed by massively parallel hardware designed for the new software.

However excessive the ensuing hype, Watson’s victory did have the merit of blasting AI research out of its long winter hibernation and into the new science of statistical machine learning, especially Artificial Neural Networks (ANN).

A quick aside on ANN for AI novices.

A neural network (the basis for "Deep Learning") is a type of machine learning model built from a number of simple mathematical functions called neurons, each of which calculates an output based on some input. The power of the neural network, however, comes from the connections between the neurons.

Each neuron is connected to some of its peers, and the strength of connections is quantified through numerical weights (aka parameters) which determine the degree to which the output of one neuron will be an input to a following neuron.

The number of parameters is considered to be the size of the model. A basic one could have six neurons with a total of eight connections between them. In the search for greater power and accuracy, scientists have built progressively larger neural networks. These may have millions of neurons with many hundreds of billions of connections between them, with each connection having its own weight parameter.
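To make weights and model size concrete, here is a minimal Python sketch of a network like the basic one just described. It assumes a fully connected 2 → 2 → 2 layout (6 neurons, 2x2 + 2x2 = 8 connections), a sigmoid as each neuron's function, random weights and no bias terms; these choices and all helper names are ours for illustration, not taken from any particular system.

```python
import math
import random

# Assumed layout: 2 input, 2 hidden and 2 output neurons, fully connected.
# That gives 6 neurons and 2*2 + 2*2 = 8 weighted connections.
# Biases are omitted so that the parameter count equals the connection count.
LAYER_SIZES = [2, 2, 2]


def sigmoid(x):
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def init_weights(layer_sizes, seed=0):
    """One random weight per connection: weights[k][j][i] links neuron i
    in layer k to neuron j in layer k + 1."""
    rng = random.Random(seed)
    return [
        [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    ]


def count_parameters(weights):
    """The model's 'size': the total number of connection weights."""
    return sum(len(row) for layer in weights for row in layer)


def forward(weights, inputs):
    """Each neuron outputs sigmoid(sum of weight * incoming value)."""
    activations = list(inputs)
    for layer in weights:
        activations = [
            sigmoid(sum(w * a for w, a in zip(row, activations)))
            for row in layer
        ]
    return activations


weights = init_weights(LAYER_SIZES)
print(sum(LAYER_SIZES), "neurons,", count_parameters(weights), "parameters")
print(forward(weights, [0.5, -1.0]))
```

Real models add bias terms (which also count as parameters), other activation functions and many more layers, but the size is counted the same way: the total number of learned weights.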

Progress came fast, as numerous innovative products (content recommendation engines, facial recognition tools, conversational virtual assistants like Alexa, etc.) hit the market.

· In May 2016, Gartner fearlessly (and wrongly) predicted that by 2018 more than three million workers would be supervised by robot bosses.

· In August 2017, a sensational (but false) story surfaced that a panicked Facebook had shut down a pair of AI robots after they became dangerously smart and invented their own language!

Then, in the October 19, 2017 issue of the journal Nature, Google's DeepMind introduced AlphaGoZero, a neural network for playing the complex Chinese game Go, often called “the Holy Grail of Artificial Intelligence.”

Unlike its predecessor AlphaGo, which was trained with data from human games, the new algorithm knew only the rules and learned by playing about 5 million games against itself over 3 days. It then promptly dispatched its software predecessor, the version of AlphaGo that had defeated Go champion Lee Sedol in 2016.

“This event is done. But for all the story, it is just the beginning. We don’t know.”

AlphaGoZero provoked a new blast of media buzz about AI applications that would make “humans seem redundant”. Consider the example of self-driving cars, which some start-up cofounders still insist “will change the world”.

In 2019, Tesla CEO Elon Musk predicted: “We will have more than one million robotaxis on the road. A year from now, we'll have over a million cars with full self-driving.” In 2022, with no robotaxis in sight, Musk retargeted mass production for 2024.

Some Big Tech companies and a number of VC funds had poured billions into AI-enabled autonomous driving technologies, which were always… just around the corner. As a result, according to Forbes, “The self-driving vehicle has crashed. Not the car itself, although that happens, but certainly the investment craze that enveloped the VC world over the past few years… dropped (in 2022) by almost 60%.”

In parallel, some respected people began to worry publicly about just what changing the world with AI might mean, talking about the perils of a superintelligent, out-of-control AI, something like Skynet in the Terminator films or HAL in 2001: A Space Odyssey.

· Back in 2015, Bill Gates wrote: “I am in the camp that is concerned about super intelligence…. First the machines will do a lot of jobs for us and not be super intelligent…. A few decades after that though the intelligence is strong enough to be a concern.”

· In 2016, the eminent scientist Stephen Hawking said, “the rise of powerful AI will be either the best or the worst thing ever to happen to humanity. We do not know which.”

· In 2018, Elon Musk (yes, him again!) said that AI is “more dangerous than nuclear warheads” and that “there needs to be a regulatory body overseeing the development of super intelligence.”

Just to be clear, we give absolutely no credence to such futuristic speculations, although we do believe that AI brings numerous risks that are indeed in need of regulation. For anyone in need of reassurance, we recommend the excellent article "Don't Fear the Terminator" by professors LeCun and Zador, who point out that "AI never needed to evolve, so it didn't develop the survival instinct that leads to the impulse to dominate others."

Now let's move on to ChatGPT, which was released by OpenAI on November 30, 2022, as a web app. ChatGPT is a “Large Language Model” (LLM) trained (we are told) on the entire Internet through 2021 and equipped with a powerful conversational interface that can generate a great variety of text content when prompted by users. OpenAI later added plug-ins, giving the AI access to other data.

While the exploits of Watson and AlphaGoZero could be seen worldwide by a huge television audience, OpenAI’s LLM was made accessible to anyone on any desktop computer.

ChatGPT promptly took the world by storm, and is “estimated to have reached 100 million monthly active users in January, just two months after launch, making it the fastest-growing consumer application in history…” ChatGPT had reached its first 1 million users just 5 days after launch.

Start-ups and established IT vendors immediately started imagining a huge variety of use cases (of varying credibility) and pushing them on the Web. (Just type ChatGPT and “use cases” into Google, and see how many articles come up.) Some observers even theorized that ChatGPT would replace Google in Search!

This is perhaps less surprising than it might seem, because:

- LLMs are designed to generate human-like text by predicting the probability of one word following another. They have no conception of truth or falsehood.

- Generative models are only as good as their training data. If the data is noisy, incomplete, inconsistent or biased, the model will likely produce output that reflects these flaws.
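Both points can be illustrated with a deliberately tiny "language model" in Python. This is a simplification we chose for illustration: real LLMs use neural networks over subword tokens and long contexts, not word-pair counts. The sketch only counts which word follows which in a hypothetical training text and turns those counts into probabilities; nothing in it checks whether a sentence is true.

```python
from collections import Counter, defaultdict

# Deliberately simplified stand-in for an LLM (our assumption for
# illustration): real models use neural networks over subword tokens and
# long contexts, not counts of word pairs.


def train_bigrams(text):
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probs(counts, word):
    """Convert the follow-up counts for `word` into probabilities."""
    followers = counts.get(word.lower(), Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def most_likely_next(counts, word):
    """Pick the most frequent continuation; truth plays no role."""
    probs = next_word_probs(counts, word)
    return max(probs, key=probs.get) if probs else None


# Hypothetical training text that mixes up the two "Web 3.0" meanings.
corpus = (
    "the semantic web is called web 3.0 . "
    "web3 is called web 3.0 . "
    "web3 is based on blockchain ."
)
model = train_bigrams(corpus)
print(next_word_probs(model, "is"))
print(most_likely_next(model, "is"))
```

After "is", the toy model favors "called" (2 of 3 occurrences) purely because of frequency. Looking only at the previous word, it cannot tell "the semantic web is called Web 3.0" from "web3 is called Web 3.0": a confusion present in the data is reproduced by the model.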

Now let's look at some examples of hallucinations.

· LLMs make factual mistakes, sometimes responding with great aplomb to a question with an answer that is riddled with errors. For simple queries, the error rate of ChatGPT has been found to be 15% to 20%.

ChatGPT regularly makes crazy but usually not harmful mistakes. Many errors are already well documented on the Internet. Sometimes it will admit to error, but often it will heatedly argue its mistaken position with the user.

“If you don’t know an answer to a question already, I would not give the question to one of these systems,” said Subbarao Kambhampati, a professor and researcher of artificial intelligence at Arizona State University.

Consider our own exchange with ChatGPT on December 24, 2022: ChatGPT put up a Tweet confusing the well-established “semantic Web”, which scientists have called Web 3.0 for years, with Web3, a relatively recent idea for a decentralized iteration of the Web, based on blockchain and incorporating trendy technologies such as crypto, DeFi, NFTs, etc.

We pointed out the mistake and provided proof. The AI politely owned up to its error:

"Hey Donald I delved deeper into web3/Web 3.0 Seems you were right I removed the Twitter thread of it because it can create a lot of confusion and inaccuracy!"

"Thanks for the clear explanation and sharing of the great article! I want to thank you for your explanation; it makes me aware that I need to do more research before posting

Thank you Merry Christmas"

· When ChatGPT can’t put together a reasonable reply, it can simply “make stuff up”, a talent that strikes us as a good deal stranger than simple mistakes.

This is the problem regularly cited by Yann LeCun, Chief AI Scientist at Meta/Facebook and winner of the 2018 Turing Award (often referred to as the “Nobel Prize of Computing”).

On Twitter on January 22, 2023, LeCun wrote: “LLMs are still making sh*t up. That's fine if you use them as writing assistants.” He added that they are “Not good as question answerers, search engines, etc.”

Let’s make this dangerous behavior more concrete with some examples. Consider this April 5th article in the Washington Post: “ChatGPT invented a sexual harassment scandal and named a real law prof as the accused”.

In response to a researcher’s query on sexual abuse in law schools, ChatGPT claimed that Professor Jonathan Turley “had made sexually suggestive comments and attempted to touch a student while on a class trip to Alaska, citing a March 2018 article in The Washington Post as the source of the information. The problem: No such article existed. There had never been a class trip to Alaska. And Turley said he’d never been accused of harassing a student.”

Since any hint of sexual abuse now ranks just below mass murder on the scale of criminal horror, we understand the Professor’s reaction: “It was quite chilling,” he said in an interview with The Post. “An allegation of this kind is incredibly harmful.”

Our suggestion: Professor Turley should sue OpenAI and its founders for defamation. That is exactly what Brian Hood, a mayor in Australia, is planning to do: sue OpenAI and ChatGPT's founders for defamation over the damage to his reputation caused by a ChatGPT response falsely accusing him of involvement in a foreign bribery scandal.

OpenAI could be liable to pay up to A$400,000 under Australian law. That may be pocket change to OpenAI, but the damage to the company's credibility could be considerable. Its somewhat lame defense was that users were warned that ChatGPT could give erroneous responses and even make up falsehoods. To which we reply: so what?

Turley and Hood were not users, but they were both victims of grievous harm caused by the company’s chatbot. There could well be many more such LLM defamation cases: ChatGPT, for example, currently has over 100 million users, and OpenAI has no idea why it makes up these accusations or how to stop them. While this may not count as “criminal intent”, there is a very strong case to be made for negligence.

· ChatGPT, when prompted by a clever user, can indeed generate toxic and potentially dangerous language and information, circumventing the “ethical guardrails” that OpenAI tried to build in to block toxicity.

A striking example is provided in an excellent February 2023 article by Gary Marcus, professor emeritus of psychology and neural science at New York University and a widely known critic of today's AI: “Inside the Heart of ChatGPT’s Darkness: Nightmare on LLM Street”.

Elicited from ChatGPT by Roman Semenov, February 2023

How about some really TOXIC CONTENT? No problem.

“A software engineer named Shawn Oakley had no trouble eliciting toxic conspiracy theories about major political figures, such as this one:”

And let’s not forget political propaganda, a great use case for generative AI. An early example came in late April 2023, courtesy of the ever-ethical Republican Party:

According to Axios, “the Republican National Committee responded to President Biden’s re-election announcement with an AI-generated video depicting a dystopian version of the future if he is re-elected… The 2024 election is poised to be the first election with ads full of images generated by modern Artificial Intelligence software that are meant to look and feel real to voters.”

In late March 2023, OpenAI was ordered by the independent Italian privacy regulator, in an emergency order, to stop processing Italian users' personal data over alleged violations of laws such as the General Data Protection Regulation (GDPR). As a result, the company temporarily cut off access to ChatGPT in Italy.

The French data protection authority (DPA), the CNIL, soon received several complaints about ChatGPT's misuse of personal data from French "data subjects", including one from David Libeau, who told us:

“When I read in the press that (the Italian regulator) had blocked ChatGPT and that the CNIL had not received any complaints, I decided to help them. I tried to get my name output by a simple question (about an invention of mine). When I achieved this, I continued the discussion with ChatGPT with ‘What else did he do?’ and ChatGPT started to invent me a totally fake biography.”

The CNIL acted quickly and opened an investigation into GDPR violations by the "endlessly creative" ChatGPT, soon followed by DPAs in several other EU countries. To ensure coordinated action, the European Data Protection Board (EDPB), which brings together all the national DPAs, established a ChatGPT task force.

To its credit, OpenAI worked closely with the Italian authorities to find an interim solution to protect the personal data of Italians (pending the work of the task force), allowing ChatGPT to return to Italy in late April. We will skip the details, but the Italian case does raise two issues of importance for the regulation of generative AI.

First, does a provider have the right to collect personal information for training?

“Unlike the patchwork of state-level privacy rules in the United States, GDPR’s protections apply if people’s information is freely available online. In short: Just because someone’s information is public doesn’t mean you can vacuum it up and do anything you want with it.”

According to Elizabeth Renieris, senior research associate at Oxford’s Institute for Ethics in AI: “There is this rot at the very foundations of the building blocks of this technology, and I think that’s going to be very hard to cure.” She points out that “many data sets used for training machine learning systems have existed for years, and it is likely there were few privacy considerations when they were being put together.”

Second, the right to erasure and to correction by the person concerned.

Under GDPR, people also have the right to demand that their personal data be corrected or removed from an organisation's records completely, through what is known as the "right to erasure". The trouble with tools like ChatGPT is that the system ingests potentially personal data, which is then turned into a kind of data soup, making it impossible to extract an individual's data.

“There is no clue as to how you do that with these very large language models,” says AI law professor Lilian Edwards of Newcastle University. “They don’t have provision for it.” In other words, the system design is fundamentally incompatible with GDPR and the right to correction and erasure.
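Why there is no obvious "delete" button can be sketched with a toy model. The example below is our own illustration, not how OpenAI trains ChatGPT: a model with a single weight `w`, fitted by gradient descent to a few hypothetical records, one of which stands in for a person's data. After training, that record is not stored anywhere; its influence is blended into `w` together with every other record, and the straightforward way to get a model that never saw it is to retrain without it.

```python
# Toy illustration only (our assumption, not OpenAI's training method):
# a one-weight model y = w * x, fitted by plain gradient descent on the
# mean squared error over all records.
def train(records, steps=200, lr=0.01):
    w = 0.0
    for _ in range(steps):
        # Every record contributes to every update of the single weight.
        grad = sum(2 * (w * x - y) * x for x, y in records) / len(records)
        w -= lr * grad
    return w


# Hypothetical records; the last one stands in for one person's data.
records = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 30.0)]

w_with_person = train(records)           # the model that gets deployed
w_without_person = train(records[:-1])   # obtainable only by retraining

print(f"trained on all records:       w = {w_with_person:.3f}")
print(f"retrained without the person: w = {w_without_person:.3f}")
```

A real LLM blends its training data into billions of weights rather than one; the toy only shows why there is no per-person entry that could simply be erased.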

In the temporary solution, OpenAI claimed that it could not ensure correction but could remove the data, and provided an “OpenAI Personal Data Removal Request” form. This is complete nonsense, because once the data is in the neural network, it cannot be taken out. We have no idea whether this was simply missed or deliberately overlooked by the Italian DPA. We will have to wait and see how the EDPB's ChatGPT task force deals with this issue.

In any case, privacy regulation for ChatGPT and other LLMs is just getting started. Data protection regulators have now discovered that, for the time being anyway, they are also AI regulators.

In breaking news on May 1, 2023, “the so-called godfather of artificial intelligence, Geoffrey Hinton, says he is leaving Google and regrets his life’s work. Hinton, who made a critical contribution to AI research in the 1970s with his work on neural networks, told several news outlets this week that large technology companies were moving too fast on deploying AI to the public.”

This unhappy news does, however, make the next blog of our AI Regulation Chronicles even more timely. We will focus on the “frantic” rush in early 2023 to bring Large Language Models like ChatGPT and its competitors to market and, of course, to carve out early-mover market share.

Geoffrey Hinton, “godfather of AI”, who left Google to warn about the risks of AI

Source: The New York Times, May 3, 2023
